Listen to this Museletter

The Future of Data Centres in the AI Era

In the early 20th century, highways transformed the movement of goods and people, driving growth and urbanisation. As cities expanded, that network had to carry more traffic, faster and more efficiently. Today, data centres, once built for cloud and enterprise workloads, are the highways of the AI era. But these highways can no longer keep up with AI’s scale, complexity, and real-time demands. The next evolution isn’t about adding lanes. It’s about rethinking infrastructure to be dynamic, adaptive, and intelligent.

Key Takeaways Shaping the Future of Data Centres

- S: Specialised systems are redefining compute economics. AI-native racks demand 60–100kW+, pushing the limits of silicon, interconnects, and performance-per-watt.

- M: Modular builds enable sub-14-month deployments, driving scalable, repeatable, and location-agnostic infrastructure for AI-native growth.

- A: Autonomous operations are arriving. Embedded AI is becoming the control layer, running infrastructure, optimising costs, and managing failures with minimal human input.

- R: Resilient fabrics blend hyperscale and edge, training at scale, inferring at the edge, and orchestrating workloads across geography, latency, and regulation.

- T: Thermal and energy breakthroughs are non-negotiable. Liquid cooling, heat reuse, and grid-aware scheduling are redefining efficiency and sustainability.

In this edition of The Agna Museletter, we explore the technologies and trends redefining data centre infrastructure, making it smarter, faster, and greener to power the AI-driven future.

Museletter Highlights:

- Agna Insights: Explore how AI is reshaping data centres into intelligent, efficient, and sustainable ecosystems

- Agna Perspectives: Interesting and insightful reads from our social media

- On the ‘Front’ier Tech: Latest updates on Frontier Technologies

- Agna Recommends: Team Agna’s curated recommendations for you

Agna Insights

From Racks to Reason: The Rise of SMART Infrastructure for the DeepTech Era

Beneath every click, prompt, and prediction lies a silent backbone: the Foundational Infrastructure Stack of Storage ↔ Compute ↔ Network, all powered by Energy. Realised in the form of data centres and interconnected systems, this stack was built for an earlier era of static workloads and predictable demands. But we’re now in the throes of an intelligence revolution, and infrastructure must evolve. It can no longer merely support intelligence; it must become intelligent.

Emerging technologies—AI, Blockchain, Quantum Computing, Immersive Reality—are converging and transforming industries at an unprecedented pace. These systems are compute-intensive, latency-sensitive, bandwidth-hungry, and energy-dependent, exposing deep limitations in the infrastructure they rely on.

This evolution isn’t just technical—it’s deeply human. At our core, we are endlessly curious beings, driven by an innate desire to solve what seems unsolvable. If the future is Agentic—defined by the rise of autonomous AI agents acting independently across systems—then our infrastructure must match this agency with adaptability. These Agentic systems operate with goals, learn in real time, and increasingly require infrastructure that is responsive, predictive, and self-governing.

This marks a new kind of arms race, not just for technological superiority but for infrastructure that can keep up with the intelligence it hosts. Demand for intelligent infrastructure is likely to keep outpacing supply, a reflection of our drive to seek, explore, and evolve. Unless we rethink how we build and scale, this perpetual pursuit will strain planetary boundaries; if we do rethink it, the same pursuit can catalyse a new class of infrastructure that is hyper-intelligent, adaptive, and resource-aware.

We’re also approaching a financial inflection point. For the last two decades, data infrastructure has been the realm of hyperscalers and specialists. Today, the narrative is shifting. Within a few years, every accredited investor, and eventually every market participant, could be directly or indirectly exposed to the data and compute economy. It is the next frontier for alternative investments and the foundation on which the AI economy will stand.

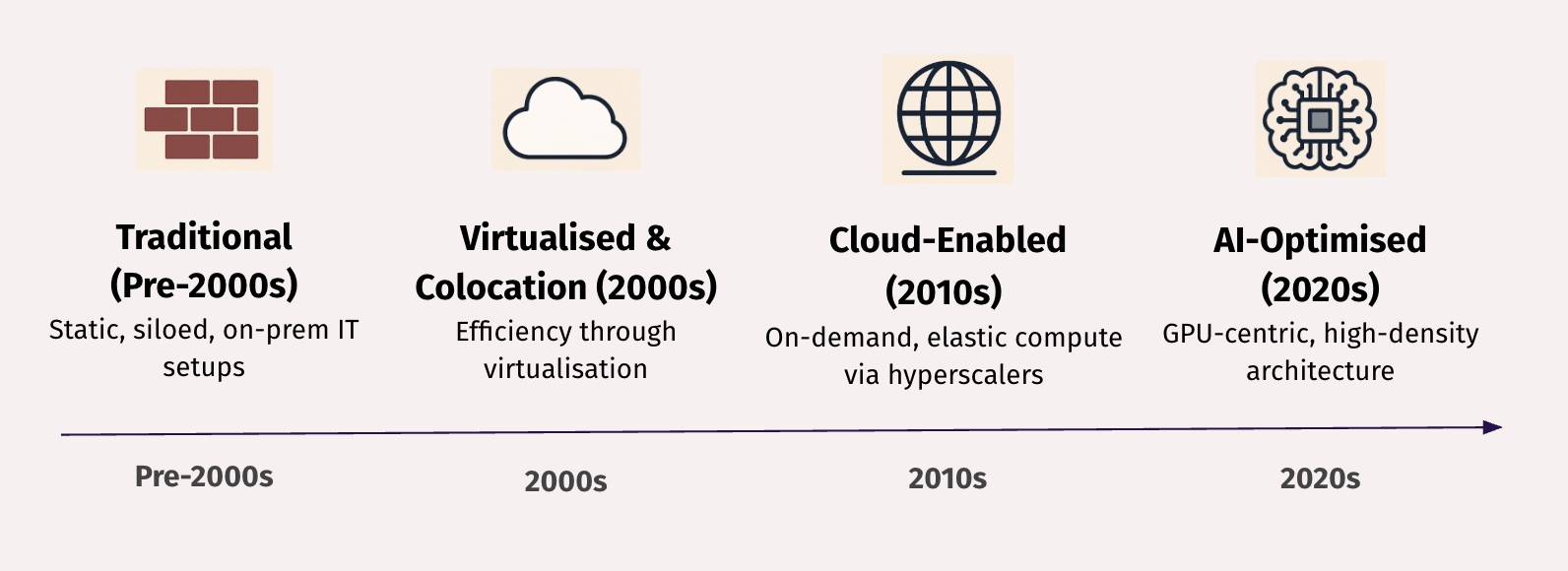

The historical evolution of infrastructure tells this story:

- Traditional (Pre-2000s): Static, siloed, on-prem IT setups

- Virtualised & Colocation (2000s): Efficiency through virtualisation

- Cloud-Enabled (2010s): On-demand, elastic compute via hyperscalers

- AI-Optimised (2020s): GPU-centric, high-density architecture

Each of these generations was state of the art in its era, but all are now insufficient: none was designed for the scale, speed, efficiency, or autonomy demanded by frontier technologies.

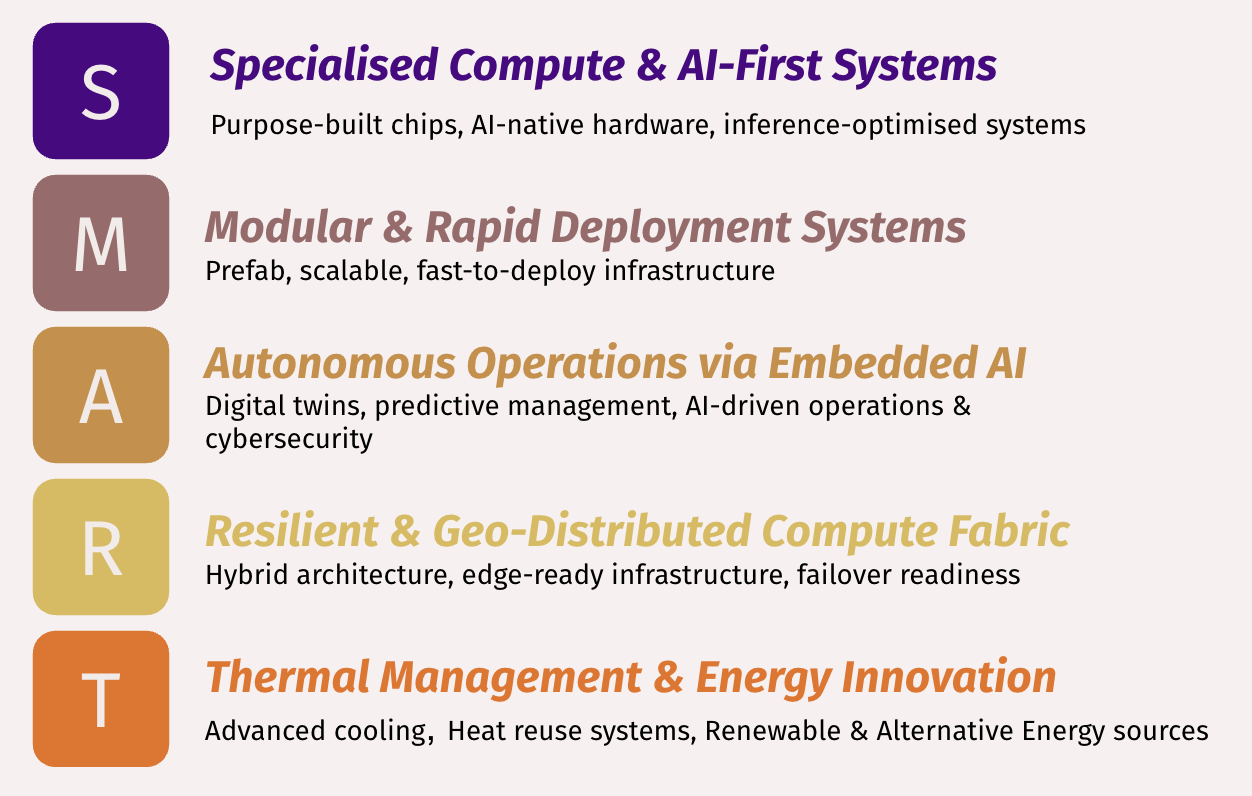

At Agna, we believe the next leap is toward SMART Infrastructure—a future-ready generation of systems designed for the age of AI and beyond:

The S.M.A.R.T. Infrastructure for the DeepTech Era

In the DeepTech era, infrastructure can’t just support intelligence—it must become intelligent. In short, we are moving from traditional IT infrastructure to systems that adapt, learn, and operate autonomously—mirroring the intelligence they support.

I. Specialised Compute & AI-First Systems

The rise of AI workloads has brought a seismic shift in compute demand. Legacy data centres typically operate within a 5–10kW per rack power envelope. In contrast, AI-native workloads often demand 60–100kW+ per rack, driven by large-scale model training and inference tasks.
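A quick way to see what this density shift means for floor space is to count racks for a fixed IT load. The minimal Python sketch below assumes a hypothetical 10 MW IT load and fully loaded racks at the densities quoted above; `racks_needed` is an illustrative helper, not an industry tool, and real designs also budget for redundancy and partial loading.

```python
import math

def racks_needed(it_load_kw: float, kw_per_rack: float) -> int:
    """Number of fully loaded racks needed to host a given IT load."""
    return math.ceil(it_load_kw / kw_per_rack)

# Hypothetical 10 MW IT load; rack densities taken from the ranges quoted above.
IT_LOAD_KW = 10_000
for label, density in [("legacy, 5 kW", 5), ("legacy, 10 kW", 10),
                       ("AI-native, 60 kW", 60), ("AI-native, 100 kW", 100)]:
    print(f"{label:>18}: {racks_needed(IT_LOAD_KW, density):>5} racks")
```

Under these assumptions the same 10 MW that needs 1,000–2,000 legacy racks fits in roughly 100–170 AI-native racks, which is why compute per square metre climbs so sharply.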

This evolution has led to a fundamental transformation in data centre design. Compute per square metre is rising rapidly as fewer but far more powerful racks are deployed in highly optimised, space-efficient configurations. At the same time, these systems are pushing power density to unprecedented levels, with each rack drawing more power than ever before.

As a result, the industry is confronting a new challenge: maximising compute per unit of power (e.g., per kW or MW of capacity). While the long-term goal is to deliver more compute per watt, in practice the sheer scale and complexity of today’s AI models are outpacing efficiency gains, so total energy consumption keeps rising. This has intensified the race among chipmakers and system designers to improve performance-per-watt through breakthroughs in silicon architecture, memory bandwidth, cooling, and system integration.
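Performance-per-watt (FLOP/s per watt) is numerically the same as FLOP per joule, so the energy a job consumes is simply its total work divided by that efficiency. The sketch below uses hypothetical numbers, not measurements of any real chip or model, to show how a 2x efficiency gain can still double total energy when the workload grows 4x.

```python
def energy_mwh(total_flop: float, flop_per_joule: float) -> float:
    """Energy (MWh) needed to perform `total_flop` operations at a given efficiency."""
    joules = total_flop / flop_per_joule
    return joules / 3.6e9  # 1 MWh = 3.6e9 J

# Hypothetical: next-generation hardware is 2x more efficient, but the workload is 4x larger.
baseline = energy_mwh(total_flop=1e24, flop_per_joule=1e12)
next_gen = energy_mwh(total_flop=4e24, flop_per_joule=2e12)
print(f"baseline: {baseline:,.0f} MWh, next generation: {next_gen:,.0f} MWh")
```

Efficiency only reduces total energy when it improves faster than the workload grows; here it does not, so consumption doubles.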

To meet these demands, companies like Cerebras, Groq, SambaNova, and NVIDIA are developing purpose-built AI hardware with massive parallelism, high-bandwidth memory, and efficient interconnects like NVLink and CXL. Modular AI systems such as NVIDIA DGX and Meta’s Grand Teton are redefining infrastructure blueprints around these energy-aware, performance-intensive chips.

Edge inference is also becoming a critical part of this fabric, demanding latency-optimised, network-aware topologies that balance speed with energy constraints at the edge.

Meanwhile, advancements in networking silicon and photonics are enhancing node-to-node connectivity. Companies like Broadcom and NVIDIA are introducing low-power, high-throughput networking chips and integrating silicon photonics to accelerate data movement while reducing energy draw — a vital enabler for scaling AI workloads sustainably across hyperscale fabrics.

II. Modular & Rapid Deployment Systems

To meet the explosive demand for AI infrastructure, data centres must now be built faster, greener, and with greater flexibility. Modular and prefabricated systems are enabling this transformation—allowing the deployment of tens of megawatts in under 14 months, even in remote or resource-constrained locations.

Companies like Huawei, Schneider Electric, Vertiv, and STACK Infrastructure are leading this modular revolution. Their end-to-end systems—covering power, cooling, data halls, and shared facilities—enable just-in-time deployments that are both scalable and location-agnostic. These solutions offer consistent performance, reduce construction waste, and accelerate time-to-value, while also supporting customisation for AI-specific workloads.

Prefabricated and plug-and-play modules simplify global replication and reduce dependence on on-site skilled labour, making them ideal for both hyperscale expansions and edge or disaster recovery deployments.

Emerging innovations like containerised edge nodes and self-contained micro data centres are enabling decentralised, on-demand compute, bringing AI capabilities closer to where data is generated. Meanwhile, open-standard initiatives like the Open Compute Project (OCP) are driving hardware interoperability and fostering a scalable, modular ecosystem.

As the industry shifts toward AI-native buildouts, modular infrastructure is no longer a niche solution; it is quickly becoming the standard paradigm for rapid, sustainable deployment.

III. Autonomous Operations via Embedded AI

AI is no longer just a workload; it is fast becoming the operational brain of infrastructure. McKinsey estimates that AI-driven energy management can reduce data centre operating costs by 20–30%. This is where intelligent infrastructure truly comes to life: AI is not just a tenant of the data centre but the system that runs it.

Leaders like NVIDIA and Schneider Electric are pioneering digital twins of AI data centres, enabling operators to simulate and optimise power flows, cooling loads, and thermal performance in real time. These virtual replicas support predictive modelling, scenario planning, and autonomous decision-making across facility operations. For instance, Google has implemented DeepMind’s AI to autonomously manage cooling systems in its data centres, resulting in a 30% reduction in energy used for cooling.
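The core loop of a digital twin used for scenario planning is to simulate candidate settings on a model, discard those that violate constraints, and pick the cheapest. The toy Python sketch below illustrates that loop for a single data hall. Everything in `ToyHallTwin` is an assumption for illustration: the linear inlet-temperature model, the chiller COP coefficients, and the 27 °C inlet limit (the upper end of ASHRAE’s recommended range). Production twins from vendors such as NVIDIA or Schneider Electric use far richer physics, and this sketch does not represent their products.

```python
from dataclasses import dataclass

@dataclass
class ToyHallTwin:
    """A deliberately simplified 'digital twin' of one data hall (hypothetical coefficients)."""
    it_load_kw: float
    recirc_c_per_kw: float = 0.002   # assumed inlet-temperature rise per kW of IT load
    cop_base: float = 3.0            # assumed chiller COP at an 18 °C supply setpoint
    cop_gain_per_c: float = 0.15     # assumed COP improvement per °C of warmer supply air

    def inlet_temp_c(self, supply_c: float) -> float:
        """Modelled server inlet temperature for a given supply-air setpoint."""
        return supply_c + self.recirc_c_per_kw * self.it_load_kw

    def cooling_kw(self, supply_c: float) -> float:
        """Electrical cooling power: heat removed divided by the modelled COP."""
        cop = self.cop_base + self.cop_gain_per_c * (supply_c - 18.0)
        return self.it_load_kw / cop

def best_setpoint(twin: ToyHallTwin, candidates, inlet_limit_c: float = 27.0):
    """Simulate each candidate setpoint; return the one with the lowest cooling power
    that keeps the modelled inlet temperature within the limit (None if none is safe)."""
    safe = [s for s in candidates if twin.inlet_temp_c(s) <= inlet_limit_c]
    return min(safe, key=twin.cooling_kw) if safe else None

twin = ToyHallTwin(it_load_kw=2_000)
setpoint = best_setpoint(twin, candidates=[18, 20, 22, 24, 26])
print(setpoint, round(twin.cooling_kw(setpoint), 1))
```

With a 2 MW load the model adds 4 °C of recirculation, so 22 °C is the warmest safe setpoint among the candidates; warmer supply air raises the modelled COP and lowers cooling power.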

AI-powered FinOps tools are also reshaping how resources are allocated, with dynamic provisioning models that can cut cloud migration and infrastructure costs by up to 25%. Platforms like Equinix SmartView offer real-time infrastructure monitoring, while Microsoft’s Project Silica explores ultra-durable storage that can withstand extreme conditions, pointing to a future where data centres are not only autonomous but also sustainable and resilient.
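Dynamic provisioning, at its simplest, means sizing capacity to forecast demand hour by hour instead of provisioning once for the peak. The Python sketch below compares the two under a hypothetical demand profile, per-node capacity, and hourly price; the 20% headroom and all figures are assumptions, and it does not model any specific FinOps product.

```python
import math

def provision(demand_per_hour, capacity_per_node, headroom=0.2):
    """Nodes to run each hour: enough for forecast demand plus a safety headroom."""
    return [math.ceil(d * (1 + headroom) / capacity_per_node) for d in demand_per_hour]

# Hypothetical daily demand profile (requests/s), per-node capacity and hourly node price.
demand = [200] * 8 + [800] * 10 + [400] * 6     # 24 hours
CAPACITY, PRICE = 100, 2.5

dynamic_nodes = provision(demand, CAPACITY)
static_nodes = max(dynamic_nodes)                 # provision once, for the peak hour
dynamic_cost = sum(dynamic_nodes) * PRICE
static_cost = static_nodes * len(demand) * PRICE
print(f"static ${static_cost:.0f}, dynamic ${dynamic_cost:.0f}, "
      f"saving {1 - dynamic_cost / static_cost:.0%}")
```

Here the dynamic plan uses 154 node-hours against 240 for static peak provisioning. The size of the saving depends entirely on how peaky the demand profile is, so it should not be read as a benchmark.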

Robotics powered by AI diagnostics are taking on infrastructure maintenance, enabling hands-free inspections and repairs. Boston Dynamics’ Spot robot, for example, is already deployed in some data centres, conducting thermal inspections and identifying anomalies in server rooms—reducing the need for human intervention.

These innovations are paving the way for fully autonomous, zero-trust data centres. AI agents are being developed to monitor, optimise, and govern the entire facility ecosystem—transforming traditional infrastructure into proactive, adaptive, and self-governing systems.

Looking ahead, autonomous data centres will feature:

- AI agents coordinating across multi-region data centres to shift workloads based on energy availability, latency, and environmental impact.

- Integrated robotics for facility maintenance, leveraging AI for diagnostics and hands-free repairs.

- AI-native cybersecurity agents capable of detecting, isolating, and neutralising threats without human intervention.

- Sovereignty-aware agents ensuring data locality and enforcing regional compliance boundaries.

- AI-powered procurement and sustainability tracking, where sourcing decisions factor real-time carbon impact and grid conditions.

- Grid negotiation, where agents engage with utility markets to sell back unused capacity or reduce load on request.

- Fully closed-loop systems where every physical subsystem—from power and cooling to security and network—responds autonomously to environmental and workload changes.
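A coordinating agent of the kind described in the first and fourth items above ultimately has to turn several signals into one placement decision. The minimal sketch below filters regions by spare power and permitted jurisdiction (a stand-in for sovereignty rules), then scores the rest by a weighted sum of latency and grid carbon intensity. The `Region` fields, region names, and weights are hypothetical; a real agent would pull these values from live telemetry and grid data.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Region:
    name: str
    latency_ms: float          # latency to the workload's users
    carbon_g_per_kwh: float    # current grid carbon intensity
    spare_power_mw: float      # power headroom available right now
    jurisdiction: str

def place(job_mw, allowed_jurisdictions, regions, w_latency=1.0, w_carbon=0.1) -> Optional[Region]:
    """Pick the lowest-cost region with enough spare power that satisfies data locality.
    Cost = weighted latency + weighted carbon intensity (weights are illustrative)."""
    eligible = [r for r in regions
                if r.spare_power_mw >= job_mw and r.jurisdiction in allowed_jurisdictions]
    if not eligible:
        return None
    return min(eligible, key=lambda r: w_latency * r.latency_ms + w_carbon * r.carbon_g_per_kwh)

regions = [
    Region("region-a", latency_ms=20, carbon_g_per_kwh=450, spare_power_mw=5, jurisdiction="EU"),
    Region("region-b", latency_ms=35, carbon_g_per_kwh=50, spare_power_mw=8, jurisdiction="EU"),
    Region("region-c", latency_ms=10, carbon_g_per_kwh=30, spare_power_mw=20, jurisdiction="US"),
]
print(place(job_mw=4, allowed_jurisdictions={"EU"}, regions=regions).name)
```

Region-c is cleanest and fastest but sits outside the permitted jurisdiction, so the agent settles on region-b, whose low carbon intensity outweighs its extra 15 ms at these weights.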

These innovations are redefining infrastructure from being reactive and siloed to being proactive, adaptive, and context-aware—laying the foundation for a future where the data centre is not just smart but self-governing.

IV. Resilient & Geo-Distributed Compute Fabric

As foundation models grow in complexity and size, hyperscale training must be paired with real-time, edge-based inference. This hybrid model is becoming the new norm.

Industry giants like Amazon, Google, and Microsoft are scaling their hyperscale infrastructure, while companies like Equinix and Digital Realty expand edge data centre footprints. Microsoft Azure Edge Zones and NVIDIA’s modular AI supercomputers bring compute closer to the source of data. Gartner projects that 75% of enterprise-generated data will be processed outside centralised data centres by 2025—driving the need for real-time workload orchestration, fault tolerance, and latency-aware compute deployment.

To ensure true resilience, this distributed architecture must include redundant fibre paths, multi-cloud interoperability, and sovereignty-aware deployment strategies. Compliance with local data regulations and the ability to reroute workloads dynamically are becoming baseline expectations for intelligent infrastructure.
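Dynamic rerouting under data-residency rules can be sketched as a small failover routine: when a region goes down, move each affected workload to the lowest-latency healthy region in the same jurisdiction, and flag it if no compliant target exists rather than silently moving it abroad. The region names, latency table, and `reroute` helper below are hypothetical.

```python
def reroute(workloads, healthy, jurisdiction, latency_ms):
    """For workloads on an unhealthy region, pick the lowest-latency healthy region in the
    same jurisdiction. Workloads with no compliant target stay put and are flagged."""
    plan, stranded = {}, []
    for workload, current in workloads.items():
        if healthy[current]:
            plan[workload] = current          # region is fine; nothing to do
            continue
        targets = [r for r, ok in healthy.items()
                   if ok and jurisdiction[r] == jurisdiction[current]]
        if targets:
            plan[workload] = min(targets, key=lambda r: latency_ms[(current, r)])
        else:
            plan[workload] = current          # never cross a border just to recover
            stranded.append(workload)
    return plan, stranded

# Hypothetical regions, jurisdictions, and inter-region latencies.
jurisdiction = {"in-west": "IN", "in-south": "IN", "in-north": "IN", "sg-central": "SG"}
latency_ms = {("in-west", "in-south"): 25, ("in-west", "in-north"): 30}
healthy = {"in-west": False, "in-south": True, "in-north": True, "sg-central": True}
workloads = {"payments": "in-west", "search": "sg-central"}
print(reroute(workloads, healthy, jurisdiction, latency_ms))
```

Flagging stranded workloads instead of forcing a move keeps the compliance decision with a human or a higher-level policy agent.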

V. Thermal Management & Energy Innovation

AI workloads are intensely power-hungry, and thermal management is fast becoming a critical bottleneck. Cooling alone accounts for over 20% of data centre energy consumption. As global AI adoption accelerates, the International Energy Agency (IEA) warns that electricity consumption from data centres, AI, and cryptocurrency could double by 2026, surpassing 1,000 terawatt-hours, roughly equivalent to Japan’s annual electricity consumption.

To address this, next-gen thermal solutions such as immersion and liquid cooling, heat exchangers, and cooling distribution units (CDUs) are moving into the mainstream. Companies like Vertiv, Schneider Electric (via Motivair), and Iceotope are leading innovation. Iceotope’s precision immersion cooling reduces water usage by up to 96% and energy consumption by nearly 40%. Meanwhile, heat reuse systems are transforming waste into value—atNorth’s Stockholm facility redirects over 85% of server heat to power local schools, pools, and homes through district heating networks.
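Two back-of-the-envelope calculations show how these figures translate to a whole facility. The sketch assumes, first, that Iceotope’s ~40% energy figure applies only to the cooling share (taken as 20% of facility energy, per the figure above), which may not match how the vendor measured it; and second, that virtually all IT power ends up as heat, with atNorth’s 85% reuse ratio applied to a hypothetical 10 MW IT load.

```python
def facility_saving(cooling_share: float, cooling_reduction: float) -> float:
    """Fraction of total facility energy saved if only the cooling portion shrinks."""
    return cooling_share * cooling_reduction

def reusable_heat_mw(it_load_mw: float, reuse_fraction: float) -> float:
    """Thermal power offered to a district-heating network.
    Assumes essentially all IT electrical power is converted to heat."""
    return it_load_mw * reuse_fraction

print(f"{facility_saving(0.20, 0.40):.0%} of facility energy")   # cooling at 20%, cut by 40%
print(f"{reusable_heat_mw(10, 0.85):.1f} MW of heat for a 10 MW IT load")
```

Under these assumptions, cooling gains alone trim roughly 8% of facility energy, while heat reuse turns a 10 MW IT load into about 8.5 MW of useful district heat.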

On the energy front, infrastructure is rapidly shifting toward cleaner and more distributed power sources. Google’s agreement with Kairos Power aims to bring a first Small Modular Reactor (SMR) online by 2030 and to supply up to 500MW of clean electricity for its data centres by 2035. Amazon is pursuing similar nuclear innovations, including an investment in SMR developer X-energy. In parallel, solar + battery solutions are proving vital for powering data centres in underserved regions like Nigeria, where stable grid access is limited.

Importantly, AI workloads differ from traditional IT—they’re often more flexible in scheduling, particularly for training and inference jobs that aren’t latency-sensitive. This flexibility is unlocking new efficiencies:

- Aligning low-priority workloads with off-peak hours or renewable energy availability

- Reducing carbon impact while optimising energy costs and space utilisation

- Shifting jobs dynamically based on real-time grid conditions
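Shifting a deferrable job to the cleanest hours reduces to a sliding-window search over a carbon-intensity forecast. The Python sketch below assumes a hypothetical 12-hour forecast in gCO2/kWh and a job that must run for 3 contiguous hours and finish by a deadline; no specific forecast source or API is assumed, and `best_window` is an illustrative helper.

```python
def best_window(carbon_forecast, job_hours, deadline_hour):
    """Return (start_hour, average_intensity) for the contiguous window with the lowest
    average forecast carbon intensity that finishes by `deadline_hour`."""
    if not 0 < job_hours <= deadline_hour <= len(carbon_forecast):
        raise ValueError("need 0 < job_hours <= deadline_hour <= forecast length")
    best = None
    for start in range(deadline_hour - job_hours + 1):
        window = carbon_forecast[start:start + job_hours]
        avg = sum(window) / job_hours
        if best is None or avg < best[1]:
            best = (start, avg)
    return best

# Hypothetical hourly forecast (gCO2/kWh) with a midday dip, e.g. from solar generation.
forecast = [420, 410, 380, 300, 220, 180, 170, 190, 260, 340, 400, 430]
start, avg = best_window(forecast, job_hours=3, deadline_hour=12)
print(f"start at hour {start}: {avg:.0f} g/kWh vs {sum(forecast[:3]) / 3:.0f} if run now")
```

Deferring the job by five hours cuts its average carbon intensity by more than half in this example; the same search works with electricity prices in place of carbon intensity.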

To support this, AI-driven, grid-aware load-balancing systems are gaining traction, along with emerging energy storage technologies like solid-state batteries and hydrogen fuel cells—all contributing to a new era of energy-intelligent infrastructure.
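The load-reduction side of grid-aware operation (agents that reduce load on utility request, as described in section III) can be sketched as priority-ordered curtailment: when a utility asks for a reduction, pause deferrable jobs from lowest priority upward until the target is met, never touching latency-critical work. The job list, priorities, and `curtail` helper are hypothetical.

```python
def curtail(jobs, reduction_kw):
    """Pause deferrable jobs, lowest priority first, until the requested load
    reduction is met. Returns (paused job names, kW actually shed)."""
    paused, shed = [], 0.0
    for name, kw, priority, deferrable in sorted(jobs, key=lambda j: j[2]):
        if shed >= reduction_kw:
            break
        if deferrable:                 # latency-critical jobs are never paused
            paused.append(name)
            shed += kw
    return paused, shed

jobs = [  # (name, load in kW, priority: lower = less important, deferrable?)
    ("batch-training", 1200, 1, True),
    ("embedding-refresh", 300, 2, True),
    ("checkpoint-eval", 250, 3, True),
    ("live-inference", 900, 9, False),
]
print(curtail(jobs, reduction_kw=1400))
```

Pausing whole jobs can overshoot the target (1,500 kW shed for a 1,400 kW request here); a finer-grained version might throttle the last job instead of pausing it.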

Together, these advances in cooling, power, and intelligent scheduling are transforming data centres from energy-intensive facilities into adaptive, sustainable systems built to support the scale and complexity of the AI era.

Conclusion:

The shift to SMART infrastructure is not optional—it’s inevitable and a strategic imperative. The winners of the DeepTech era will not just be those who build smarter models but those who build, fund, and operate SMARTer systems: infrastructure that is powerful, distributed, sustainable, and adaptive.

Agna Perspectives

- Read why Indian companies are flocking to Dubai, driven by the India–UAE Comprehensive Economic Partnership Agreement (CEPA), business-friendly free zones, and a robust logistics network enabling global access. With swift registration, tax perks, and strong government backing, fintech, AI, and SaaS startups are seizing expansion opportunities.

- Read how China’s Zuchongzhi 3.0 quantum processor outpaced Google’s Sycamore by a million-fold, solving in seconds what would take supercomputers 6.4 billion years. While it marks a major leap in quantum supremacy, challenges in error correction and real-world applications remain.

- Did you know that women remain vastly underrepresented in AI and DeepTech, receiving just 9% of VC funding in the Middle East and under 4% in Asia?

On the ‘Front’ier Tech:

- India’s data centre industry saw $6.5 billion in investment (2014–2024), with capacity growing 139% to 1.4 GW, driven by rising internet penetration and data consumption. Mumbai and Chennai lead, together accounting for 70% of capacity, while edge data centres are emerging in Tier 2/3 cities to support Gen AI and latency-sensitive applications.

- A bitcoin mine in remote Zambia, powered by cheap hydroelectric energy from the Zengamina plant, has helped sustain local power needs while generating profits for Gridless. As the plant expands and connects to the national grid, Gridless plans to replicate this model across Africa by building its own hydro-plants to power rural communities and mine bitcoin.

- Goldman Sachs Research predicts a 165% rise in global data centre power demand by 2030, driven by AI growth, with hyperscalers and data centre operators poised to benefit. However, power grid constraints and slower AI adoption could moderate this growth.

Agna Recommends:

Listen to an episode featuring Adam Haynes from SimpleMining.io, discussing Bitcoin mining fundamentals, energy innovations, and advanced technologies like AI and quantum computing.

The Big Switch: Rewiring the World, from Edison to Google by Nicholas Carr (2008)

Examines the rise of cloud computing and how data centres have become the backbone of modern digital infrastructure, which now powers AI and Bitcoin mining.

SemiAnalysis’ Jeremie Eliahou Ontiveros on AI and Data Centers

A podcast where technology analyst Jeremie Eliahou Ontiveros discusses the supply and demand dynamics of AI and data centres.


Questions? Feedback? Different perspective?

We invite you to engage with us and collaborate.

Warm Regards,

Team Agna
